Panoramic Unknowns

Nicolas Malevé

1. The Caltech lab seen by a Kodak DC280

I am looking at a folder of 450 pictures. This folder, named faces1999, is what computer vision scientists call a dataset, a collection of images to test and train their algorithms. The pictures have all been shot using the same device, a Kodak DC280, a digital camera aimed at the “keen amateur digital photographer”.[1] If the Kodak DC280 promised a greater integration of the camera within the digital photographic workflow, it was not entirely seamless and required the collaboration of the photographer at various stages. The camera was shipped with a 20 MB memory card. The folder size is 74.8 MB, nearly four times the card’s storage capacity. The photographs were taken during various sessions between November 1999 and January 2000, and the card had to be emptied onto a computer several times. Additionally, if the writing on the card was automatic, it was not entirely transparent. As product reviewer Phil Askey noted, “Operation is quick, although you’re aware that the camera takes quite a while to write out to the CF card (the activity LED indicates when the camera is writing to the card).”[2]

Moving from one storage volume (the CF card) to another (the researcher’s hard drive), files acquire a new name. A look at the file names in the dataset reveals that the dataset is not a mere dump of the successive shooting sessions. By default, the camera follows a generic naming procedure: the photos’ names are composed of the prefix “dcp_” followed by a four-digit identifier padded with zeroes (i.e. dcp_0001.jpg, dcp_0002.jpg, etc.). The photographer, however, took pains to rename all the pictures following his own convention: he used the prefix “image_” and kept the sequential numbering format (i.e. image_0001.jpg, image_0002.jpg, etc.). The photos’ metadata shows that there are gaps between various series of shots and that the folder’s ordering doesn’t correspond to the images’ capture dates. It is therefore difficult to say how far the photographer went in the re-ordering of his images. The ordering of the folder has erased the initial ordering of the device, and some images may have been discarded.
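The mismatch between folder order and capture date can be checked mechanically. What follows is a minimal sketch in Python, assuming the capture times have already been read from each file's Exif metadata into (file name, timestamp) pairs. The sample records, the six-hour session threshold and the function names are illustrative assumptions, not values taken from the dataset.

```python
from datetime import datetime, timedelta

# Hypothetical records: (file name, Exif capture time). Invented for illustration.
records = [
    ("image_0001.jpg", datetime(1999, 11, 26, 10, 15)),
    ("image_0002.jpg", datetime(1999, 11, 26, 10, 16)),
    ("image_0003.jpg", datetime(2000, 1, 12, 15, 40)),
    ("image_0004.jpg", datetime(1999, 12, 3, 9, 5)),
]

def out_of_order(records):
    """Return the names of files captured earlier than their predecessor in name order."""
    ordered = sorted(records)  # tuples sort by file name first
    return [b[0] for a, b in zip(ordered, ordered[1:]) if b[1] < a[1]]

def sessions(records, gap=timedelta(hours=6)):
    """Group files into shooting sessions separated by more than `gap` (assumed threshold)."""
    by_time = sorted(records, key=lambda r: r[1])
    groups = []
    for name, taken in by_time:
        # Continue the current session if this shot follows the previous one closely.
        if groups and taken - groups[-1][-1][1] <= gap:
            groups[-1].append((name, taken))
        else:
            groups.append([(name, taken)])
    return groups
```

With these invented records, `out_of_order(records)` flags the file whose name places it after a later shot, and `sessions(records)` separates the series of shots wherever the capture times leave a gap. Neither function can recover images that were discarded before the renaming.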

The decision to alter the ordering of the photos becomes clearer when observing the preview of the folder on my computer. My file manager displays the photos as a grid, offering me a near-comprehensive view of the set. What stands out from the ensemble is the recurrence of the centered frontal face. The photos are ordered by their content, the people they represent. There is a clear articulation between figure and background, a distribution of what the software will need to detect and what it will have to learn to ignore.[3] To enforce this division, the creator of the data set has annotated the photographs: in a file attached to the photographs, he provides the coordinates of the faces represented in the photos. This foreground/background division pivoting on the subject’s face relates to what my interlocutors, Femke and Jara, whose commentaries and writings are woven within this text, are calling a volumetric regime. This expression in our conversations functions as a sensitizing device to the various operations of naturalized volumetric and spatial techniques. I am refraining from defining it now and will provisionally use the expression to signal, in this situation, the preponderance of an organising pattern (face versus non-face) implying a planar hierarchy. Simultaneously, this first look at the file manager display generates an opposite sensation: the intuition that other forms of continuity are at play in the data set. This complicates what data is supposed to be and the web of relations it is inserted in.

2. Stitching with Hugin

The starting point of this text is to explore this intuition: is there a form of spatial trajectory in the data set and how to attend to it? I have already observed that there was a spatial trajectory inherent in the translation of the files from one storage volume to another. This volumetric operation had its own temporality (i.e. unloading the camera to take more photos) and it brought in its own nomenclature (the renaming of the files and their re-ordering). The spatial trajectory I am following here is of another nature. It happens when the files are viewed as photographs, and not merely as arrays of pixels. It is a trajectory that does not follow the salient features the data set is supposed to register, the frontal face. Instead of apprehending the data set as a collection of faces, I set out to follow the trajectory of the photographer through the lab’s maze. Faces1999 is not spatially unified, it is the intertwining of several spaces: offices, corridors, patio, kitchen ... more importantly, it conveys a sense of provisional continuity and passage. How to know more about this intuition? How to find a process that sets my thoughts in motion? As a beginning, I am attempting to perform what we call a probe at the Institute for Computational Vandalism: pushing a software slightly outside of its boundaries to gain knowledge about the objects it takes for granted.[4] In an attempt to apprehend the spatial continuum, I introduce the data set’s photographs in an image panorama software called Hugin. I know in advance that using these photos as an input for Hugin will push the boundaries of the software’s requirements. The ideal scenario for software such as Hugin is a collection of photographs taken sequentially, and its task is to minimise the distortions produced by changes in the point of view. For Hugin, different photos can be aligned and re-projected on the same plane. The software won’t be able to compensate for the incompleteness of the spatial representation, but I am interested to see what it does with the continuities and contiguities, even as partial as they are. I am interested to follow its process and to see where it guides my eyes.

Hugin can function autonomously and look for the points of interest in the photographs that will allow it to stitch the different views together. It can also let the user select these points. For this investigation, the probe is made by a manual selection of the points in the backgrounds of the photos. To select these points, I am forced to look for the visual clues connecting the photos. Little by little, I reconstruct two bookshelves forming a corner. Then, elements in the pictures become eventful. Using posters on the wall, I discover a door opening onto an office with a window with a view onto a patio. Comparing the orientation of the posters, I realize I am looking at different pictures of the same door. I can see a hand, probably of a person sitting in front of a computer. As someone shuts the door, the hand disappears again. One day later, the seat is empty, books have been rearranged on the shelves, stacks of papers have appeared on a desk. In two months, the background slowly moves, evolves. On the other side of the shelves, there is a big white cupboard with an opening through which one can see a slide projector. Following that direction is a corridor. The corridor wall is covered with posters announcing computer vision conferences and competitions for students. There is also a selection of photographs representing a pool party that helps me “articulate” several photographs together. Six pictures show men in a pool. Next to these, a large photo of a man lying on the grass in natural light is vaguely reminiscent of an impressionist painting. Workers partying outside of the workplace pictured on the workplace’s walls.

At regular intervals, I press a button labeled “stitch” in the panorama software and Hugin generates a composite image for me. Hugin does not merely overlay the photos. It attempts to correct the perspectival distortions, smooth out the lighting contrasts, resolve exposure conflicts and blend the overlapping photos. When images are added to the panorama, the frontal faces are gradually faded and the background becomes salient. As a result, the background is transformed. Individual objects lose their legibility, book titles fade. What becomes apparent is the rhythm, the separations and the separators, the partition of space. The material support for classification takes over its content: library labels, colors of covers and book edges become prominent.

Finding a poster in a photo, then seeing it in another photo, this time next to a door knob, then in yet another that is half masked by another poster makes me go through the photos back and forth many times. After a while, my awareness of the limits of the corpus of photos grows. It grows enough to have an incipient sensation of a place out of the fragmentary perceptions. And concomitantly, a sense of the missing pictures, missing from a whole that is nearly tangible. With a sense that their absence can perhaps be compensated. Little by little, a traversal becomes possible for me. Here, however, Hugin and I part ways. Hugin gives up on the overwhelming task of resolving all these views into a coherent perspective. Its attempt to recover the contradictory perspectives ends up in a flamboyant spiraling outburst. Whilst Hugin attempts to close the space upon a spherical projection, the tedious work of finding connecting points in the photos gave me another sensation of the space, passage by passage, abandoning the idea of a point of view that would offer an overarching perspective. Just as a blind person touching the contiguous surface can find their way through the maze, I can intuit continuities, contiguities, and spatial proximities that open a volume onto one another. The dataset opens up a world with depth. There is a body circulating in that space, the photos are the product of this circulation.

3. Accidental ethnography

As I mentioned at the beginning of this text, this folder of photographs is what computer vision engineers call a dataset: a collection of digital photographs that developers use as material to test and train their algorithms on. Using the same dataset allows different developers to compare their work. The notice that comes along with the photographs gives a bit more information about the purpose of this image collection. The notice, a document named README, states:

Frontal face dataset. Collected by Markus Weber at California Institute of Technology.

450 face images. 896 x 592 pixels. Jpeg format. 27 or so unique people under with different lighting/expressions/backgrounds.

ImageData.mat is a Matlab file containing the variable SubDir_Data which is an 8 x 450 matrix. Each column of this matrix hold the coordinates of the bike within the image, in the form:

[x_bot_left y_bot_left x_top_left y_top_left ... x_top_right y_top_right x_bot_right y_bot_right]

------------

R. Fergus 15/02/03
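The column layout described in the README can be unpacked in a few lines. This is a sketch in Python, assuming the matrix has already been loaded from ImageData.mat (the loading step is not shown) and that the “...” in the README is a Matlab line continuation, so that each column holds exactly the eight listed values. The function names and the sample column are invented for illustration.

```python
# Corner order as listed in the README: bottom-left, top-left, top-right, bottom-right.
CORNERS = ("bot_left", "top_left", "top_right", "bot_right")

def corners(column):
    """Split one 8-value column into four named (x, y) corner points."""
    if len(column) != 8:
        raise ValueError("expected 8 values per column")
    pairs = zip(column[0::2], column[1::2])  # x values at even, y values at odd positions
    return dict(zip(CORNERS, pairs))

def bounding_box(column):
    """Return (x_min, y_min, x_max, y_max) enclosing the four corners."""
    xs, ys = column[0::2], column[1::2]
    return (min(xs), min(ys), max(xs), max(ys))

# Hypothetical column for one image (invented values within 896 x 592 pixels).
sample = [300, 400, 300, 150, 520, 150, 520, 400]
```

Here `corners(sample)` returns the four points keyed by position and `bounding_box(sample)` reduces them to a single rectangle, which is the region a program would treat as the face and everything outside it as background.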

As announced in the first line, Faces1999 contains pictures of people photographed frontally. The collection contains mainly close-ups of faces. To a lesser degree, it contains photographs of people in medium shots. And to an even lesser degree, it contains three painted silhouettes of famous actors, such as Buster Keaton. But my trajectory with Hugin, my apprehension of stitches and passages, leads me elsewhere than the faces. I am learning to move across the dataset. This movement is not made of a series of discrete steps, each positioning me in front of a face (frontal faces), but a transversal displacement. It teaches me to observe textures and separators, grids, shelves, doors; it brings me into an accidental ethnography of the lab surfaces.

Most of the portraits are taken in the same office environment. In the background, I can see shelves stacked with programming books, walls adorned with a selection of holiday pictures, an office kitchen, several white boards covered with mathematical notations, news boards with invitations to conferences, presentations, parties, and several files extracted from a policy document, a first aid kit next to a box of Nescafé, a slide projector locked in a cupboard.

Looking at the books on display on the different shelves, I play with the idea of reconstructing the lab's software ecosystem. Software for mathematics and statistics: thick volumes of Matlab and Matlab related manuals (like Simulink), general topics like vector calculus, applied functional analysis, signal processing, digital systems engineering, systems programming, concurrent programming, specific algorithms (active contours, face and gesture recognition, the EM algorithm and extensions) or generic ones (a volume on sorting and searching, cognition and neural networks), low level programming languages Turbo C/C++, Visual C++ and Numerical Recipes in C. Heavily implanted in math more than in language. The software ecosystem also includes resources about data visualisation and computer graphics more generally (the display of quantitative information, Claris Draw, Draw 8, OpenGL) as well as office related programmes (MS Office, Microsoft NT). There are various degrees of abstraction on display. Theory and software manuals, journals, introductions to languages and specialized literature on a topic. Book titles ending with the word “theory” or ending with the word “programming”, “elementary” or “advanced”. Design versus recipe. There is a mix of theoretical and applied research. The shelves contain more than software documentation: an electronic components catalogue and a book by John Le Carré are sitting side by side. It ironically reminds us that science is not made with science only, nor software by code exclusively.

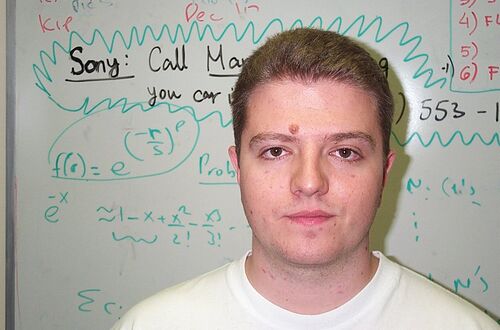

Books are stacked. Each book claiming its domain. Each shelf adding a new segment to the wall. Continuing my discovery of spatial continuities, I turn my attention to surfaces with a more conjunctive quality. There is a sense of conversation happening in the backgrounds. The backgrounds are densely covered with inscriptions of different sorts. They are also overlaid by commentaries underlining the mixed nature of research activity. Work regulation documents (a summary of the Employee Polygraph Protection Act), staff emails, address directories, a map of the building, invitations to conferences and parties, job ads, administrative announcements, a calendar page for October 1999, all suggest that more than code and mathematics are happening in this environment. These surfaces are calling their readers out: bureaucratic injunctions, interpellations, invitations using the language of advertising. On a door, a sign reads “Please do not disturb”. A note signed Jean-Yves insists “Please do NOT put your fingers on the screen. Thanks.” There are networks of colleagues in the lab and beyond. These signs are testament to the activities they try to regulate: people open doors uninvited and show each other things on screens, leaving finger traces. But the sense of intertwining of the ongoing social activity and the work of knowledge production is nowhere more present than in the picture of a whiteboard where complex mathematical equations cohabit with a note partially masked by a frontal face: Sony Call Ma... your car is … 553-1. The same surface of inscription is used for both sketching the outline of an idea and internal communication.

Approaching the dataset this way offers an alternative reading of the manners in which this computer vision lab represents to itself and to others what its work consists of. The emic narrative doesn’t offer a mere definition of the members’ activity. It comes with its own continuities. One such continuity is the dataset’s temporal inscription into a narrative of technical progress that results in a comparison with the current development of technology. I realize how difficult it is to resist, and how much I myself mentally compare the Kodak camera to the devices I am using. I take most of my photos with a phone. My phone’s memory card holds 10 gigabytes whereas Kodak proudly advertised a 20 MB card for its DC280 model. The dataset’s size pales in comparison to current standards (a state-of-the-art dataset such as UMDFaces includes 367,000 face annotations[5] and VGGFace2 provides 3.3 million face images downloaded from Google Image Search).[6] The question of progress here is problematic in that it tells a story of continuity that is recurrent in books, manuals and blogs related to AI and machine learning. This story can be sketched as: “Back in the days, hardware was limited, data was limited, then came the data explosion and now we can make neural networks properly.”[7] Whilst this narrative is not inherently baseless, it makes it difficult to attend to the specificity of what this dataset is, how it relates to larger networks of operation, and what can be learned from it. In a narrative of progress, it is defined by what it is no longer (it is not defined by the scarcity of the digital photograph anymore) and by what it will become (faces1999 is like a contemporary dataset, but smaller). The dataset is understood through a simple narrative of volumetric evolution where the exponential increase of storage volumes rhymes with technological improvement. Then it is easy to be caught in a discourse that treats the form of its photographic elaboration as an in-between. Already digital but not yet networked, post-analogue but pre-Flickr.

4. Photography and its regular objects

So how to attend to its photographic elaboration? What are the devices and the organisation of labour necessary to produce such a thing as Faces1999? The photographic practice of the Caltech engineers matters more than it may seem. Photography in a dataset such as this is a leveler. It is the device through which disparate fragments that make up the visual world can be compared. Photography is used as a tool for representation and as a tool to regularise data objects. The regularization of scientific objects opens the door to the representation and naturalization of cultural choices. It is representationally active. It involves the encoding of gender binaries, racial sorting, spatial delineation (what happens indoors and outdoors in the dataset). Who takes the photo, who is the subject? Who is included and who is excluded? The photographer is a member of the community. In some way, he[8] is the measure of the dataset. It is a dataset at his scale. To move through the dataset is to move through his spatial scale, his surroundings. Where he can easily move and recruit people, he has bonds with the “subjects”. He can ask them “come with me”, “please smile” to gather facial expressions. Following the photographer, we move from the lab to the family circle. About fifty photos interspersed with the lab photos represent relatives of the researchers in their interior spaces. While it is difficult to say for certain how close they are, they depict women and children in a house interior. It is his world, ready to offer itself to his camera.

Further, to use Karen Barad’s vocabulary, the regularization performs an agential cut: it enacts entities with agency and by doing so, it enforces a division of labor.[9] My characterization of the photographer and his subject has until now remained narrow. The subjects do not simply respond to the photographer, but they respond to an assemblage comprising at a minimum the photographer, camera, familiar space, lighting condition, and storage volumes. To take a photo means more than a transaction between a person seeing and a person seen. Proximity here does not translate smoothly to intimacy. In some sense, to regularize his objects, as a photographer, the dataset maker must be like everyone else. The photograph must be at some level interchangeable with those of the “regular photographer”. The procedure to acquire the photographs of faces1999 is not defined. Yet regularization and normalization are at work. The regulative and normalizing functions of the digital camera, its ability to adapt, its distribution of competences, its segmentation of space are operating. But also its conventions, its acceptability. The photographic device here works as a soft ruler that adjusts to the fluctuating contours of the objects it measures.

Its objects are not simply the faces of the people in front of the photographer. The dataset maker’s priority is not to ensure indexicality. He is less seeking to represent the faces as if they were things “out there” in the world than trying to model a form of mediation. The approach of the faces1999 researchers is not one of direct mediation where the camera is simply considered a transparent window to the world. If that were the case, the researchers would have removed all the “artefactual” photographs wherein the mediation of the camera is explicit: where the camera blurs or outright cancels the representation of the frontal face. What it models instead is an average photographic output. It does not model the frontal face, it models the frontal face as mediated by the practice of amateur photography. In this sense, it bears little relation to the tradition of scientific photography that seeks to transparently address its object. To capture the frontal face as mediated by vernacular photography, the computer scientist doesn’t need to work hard to remove the artifactuality of its representation. He needs to work as little as possible, he needs to let himself be guided by a practice external to his field, to let vernacular photography infiltrate his discipline.

The dataset maker internalizes a common photographic practice. For this, he must be a particular kind of functionary of the camera, as Flusser would have it.[10] He needs to produce a certain level of entropy in the program of the camera. The camera’s presets are determined to produce predictable photographs. The use of the flash, the speed, the aperture are controlled by the camera to keep the result within certain aesthetic norms. The regularization therefore implies a certain dance with the kind of behavior the photographer is expected to adopt. If the dataset maker doesn’t interfere with the regulatory functions of the camera, the device may well regularize too much of the dataset and therefore move away from the variations that one can find in amateur photo albums. The dataset maker must therefore trick the camera into making as many “bad” photos as would normally happen over the course of a long period of shooting. The flash must not fire at times even when the visibility in the foreground is low. This requires the circumvention of the default camera behavior in order to provoke an accident bound to happen over time. A certain amount of photos must be taken with the subject off-centre. Faces must be occasionally out of focus. And when an accident happens by chance, it is kept in the dataset. However, these accidents cannot exceed a certain threshold: images need to remain generic. The dataset maker explores the thin range of variation in the camera’s default mode that corresponds to the mainstream use of the device. The researcher does not systematically explore all the parameters. They introduce a certain wavering in its regularities. A measured amount of bumps and lumps. A homeopathic dose of accidents. At each moment, there is a perspective, a trajectory that inflects the way the image is taken. It is never only a representation, it always anticipates variations and redundancies, it always anticipates its ultimate stabilization as data. The identification of exceptions and the inclusion of accidents is part of the elaboration of the rules. The dataset maker cannot afford to forget that the software does not need to learn to detect faces in the abstract. It needs to learn to detect faces as they are made visible within a specific practice of photography and internalized to some degree by the camera.

5. Volumetric regimes

Everything I have written until now has been the result of several hours of looking at the faces1999 images. I have done it through various means. In a photo gallery, through an Exif reader program, via custom code, and through the panorama software Hugin. However, nowhere in the README or the website where the dataset can be downloaded can an explicit invitation to look at the photos be found. The README refers to one particular use. The areas of interest compiled in the Matlab file make clear that the privileged access to the dataset is through programs that treat the images as matrices of numbers and process them as such. It doesn’t mean a dataset such as faces1999 cannot be treated as an object to be investigated visually. 27 faces is an amount that one person can process without too much trouble. One can easily differentiate them and remember most of them. For the photographer and the person who annotated the dataset, who traced the bounding boxes around the faces, the sense of familiarity was even stronger. They were workmates or even family. The dataset maker could be present at all stages in the creation of the dataset: he would select the people, the backgrounds, press the shutter, assemble and rename the pictures, trace the bounding boxes, write the readme, compress the files and upload them to the website. Even if Hugin could not satisfactorily resolve the juxtaposition of points of view, its failure still hinted at a potential panorama ensuring a continuity through the various takes. There was at least a possibility of an overview, of grasping a totality.

This takes me back to the question of faces1999’s place in a narrative of technological progress. In such a narrative, it plays a minor role and should be forgotten. It is not a standard reference of the field and its size pales in comparison to current standards. However, my aim with this text is to insist that datasets in computer vision should not be treated as mere collections of data points or representations that can be simply compared quantitatively. They articulate different dimensions and distances. If the photos cut the lab into pieces, to assemble faces1999 implies a potential stitching of these fragments. This created various virtual pathways through the collection that mobilized conjunctive surfaces, walls covered with instructions and recursive openings (a door opening on an office with a window opening on a patio). There were passageways connecting the lab to the home and back. There was cohesion if not coherence. At the invitation of Jara and Femke, taking the idea of a volumetric regime as a device to think together the sequencing of points of view, the naturalization of the opposition between face and background, the segmentations, but also the stitches, the passageways, the conjunctive surfaces, the storage volumes (of the brand new digital camera and the compressed archive) through which the dataset is distributed, I have words to apprehend better the singularity of faces1999. Faces1999 is not a small version of a contemporary dataset. A quantitative change reaches out into other dimensions, another space, another coherence, another division of labour and another photographic practice. Another volumetric regime.

Acknowledging its singularity does not mean turning faces1999 into a nostalgic icon. It matters because recognizing its volumetric regime changes the questions that can be asked of current datasets. Instead of asking how large they are, how much they have evolved, I may be asking to which volumetric regime they belong (and which they help enact in return). Which means a flurry of new questions needs to be raised: what are the dataset’s passageways? How do they split and stitch? What are its conjunctive surfaces? What is the division of labour that subtends it? How is the photographic apparatus involved in the regularization of their objects? And what counts as photographic apparatus in this operation?

Asking these questions of datasets such as MegaFace, Labeled Faces in the Wild or Google facial expression comparison would immediately signal a different volumetric regime that cannot be reduced to a quantitative increase. The computer scientist, once an amateur photographer, becomes a photo-curator (the photos are sourced from search engines rather than produced by the dataset maker). The conjunctive surfaces that connect administrative guidelines and mathematical formulas would not be represented in the photos’ backgrounds but built into the contract and transactions of the platform of annotation that recruits the thousands of workers necessary to label the images (instead of the lone packager of faces1999). Their passageways should not be sought in the depicted spaces in which the faces appear, but in the itineraries that these photos have followed online. And however we would like to qualify their cohesion if not coherence, we should not look for a panorama, even incomplete and fragmented, but for other modes of stitching and splitting, of combining their storage volumes and conjunctive surfaces.

Notes

- P. Askey, “Kodak DC280 Review,” DPReview, accessed November 19, 2020.

- Askey, “Kodak DC280 Review,” 6.

- In an article related to the dataset Caltech 256, Pietro Perona, pictured in faces1999, uses the word clutter to describe what is not the main object of the photo. G. Griffin, A. Holub, and P. Perona, “Caltech-256 Object Category Dataset,” CalTech Report, 2007.

- Geoff Cox, Nicolas Malevé, and Michael Murtaugh, “Archiving the Data Body: Human and Nonhuman Agency in the Documents of Erkki Kurenniemi,” in Writing and UnWriting Media (Art) History: Erkki Kurenniemi in 2048, eds. Joasia Krysa and Jussi Parikka (Cambridge, MA: MIT Press, 2015), 125-142.

- A. Bansal et al., “UMDFaces: An Annotated Face Dataset for Training Deep Networks,” CoRR abs/1611.01484 (2016), http://arxiv.org/abs/1611.01484.

- Q. Cao et al., “VGGFace2: A Dataset for Recognising Faces across Pose and Age,” CoRR abs/1710.08092 (2017), http://arxiv.org/abs/1710.08092.

- For an example of such a narrative, see A. Kurenkov, “A ‘Brief’ History of Neural Nets and Deep Learning, Part 1,” accessed January 2, 2017, http://www.andreykurenkov.com/writing/a-brief-history-of-neural-nets-and-deep-learning

- On the soft versus hard rule, see Lorraine Daston, “Algorithms Before Computers,” Simpson Center for the Humanities UW (2017), accessed March 25, 2020, https://www.youtube.com/watch?v=pqoSMWnWTwA

- Karen Barad, “Meeting the Universe Halfway: Realism and Social Constructivism without Contradiction,” in Feminism, Science, and the Philosophy of Science, eds. L. H. Nelson and J. Nelson (Dordrecht: Springer Netherlands, 1996), 161-194.

- Vilém Flusser, Towards a Philosophy of Photography (London: Reaktion Books, 2000).